- Integrated Guide to FMEA: AIAG 5th Edition & VDA Alignment

- 🚗 Introduction: Why FMEA Matters in the Automotive Industry

- 🎯 Core Objectives of FMEA

- 🔗 Embedding FMEA in the Development Process

- 🧭 The 7-Step FMEA Approach (AIAG & VDA)

- 📊 Severity, Occurrence, and Detection Ratings

- ⚠️ Action Priority (AP): A Modern Replacement for RPN

- 🧩 Design FMEA (DFMEA)

- 🏭 Process FMEA (PFMEA)

- 🧠 FMEA-MSR: Monitoring and System Response

- 🔄 Information Flow: DFMEA to PFMEA

- 📁 Handling Existing FMEAs

- 🔐 Intellectual Property and FMEA Documentation

- ✅ Best Practices for Effective FMEA

- 🏁 Conclusion: FMEA as a Strategic Quality Tool

- 📊 Extended Automotive Examples & Action Priority Dashboard

Integrated Guide to FMEA: AIAG 5th Edition & VDA Alignment

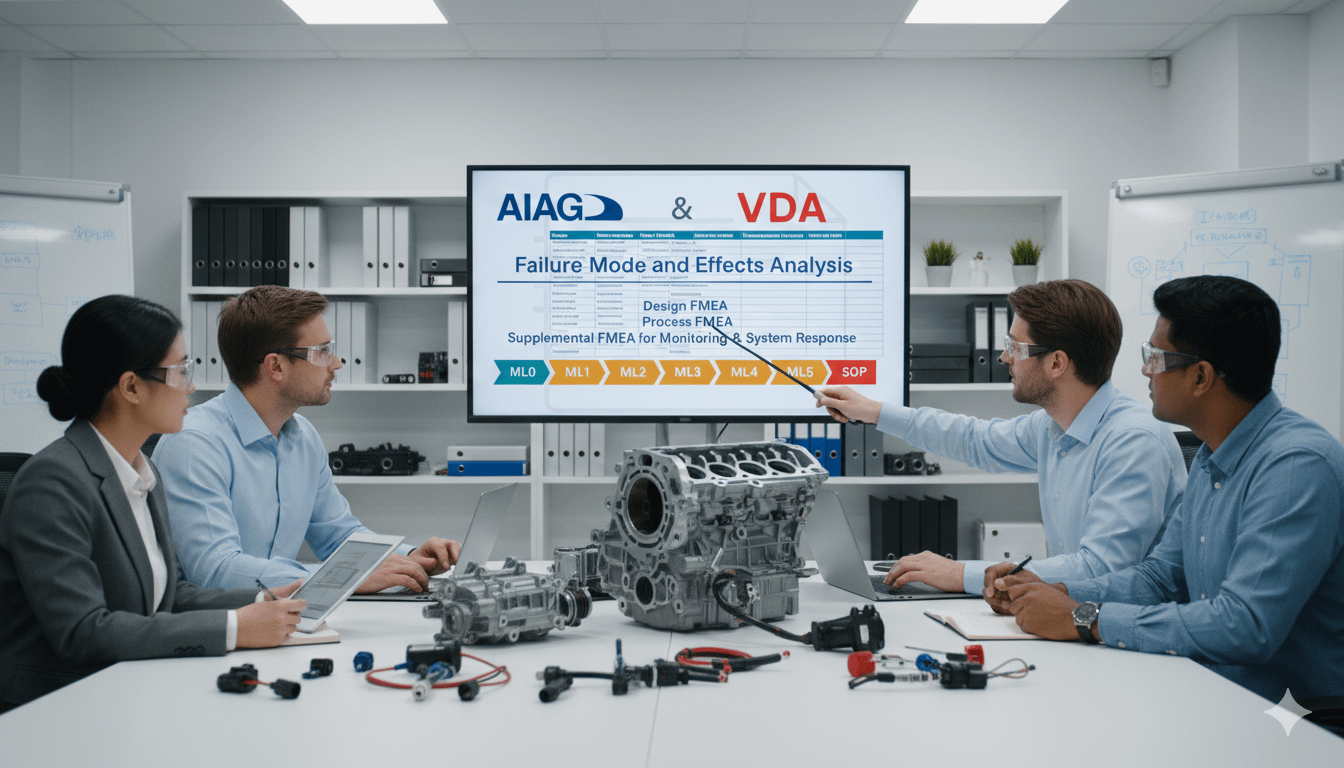

🚗 Introduction: Why FMEA Matters in the Automotive Industry

Failure Mode and Effects Analysis (FMEA) is a structured, proactive methodology used across the automotive industry to identify potential failure modes, evaluate their effects, and implement corrective actions to minimize risk. As vehicles become more complex and safety-critical, FMEA serves as a cornerstone of quality planning and risk mitigation.

Traditionally, suppliers working with both North American OEMs (AIAG) and German OEMs (VDA) faced challenges due to differing FMEA methodologies. The 2019 joint AIAG & VDA FMEA Handbook harmonized the two approaches into a single methodology, so suppliers serving both markets can work to one set of expectations.

🎯 Core Objectives of FMEA

- Prevent failures early in product and process development

- Evaluate potential risks in design, manufacturing, and operation

- Establish controls and actions that reduce severity or occurrence, or improve detection

- Ensure compliance with regulatory, safety, and customer-specific requirements

- Support continuous improvement through lessons learned and best practices

🔗 Embedding FMEA in the Development Process

FMEA is not a standalone activity. It should be initiated early and integrated into:

- Project planning

- Design reviews

- Process validation

Key Integration Points:

- Align FMEA milestones with project phases

- Include cross-functional teams (engineering, manufacturing, quality, supplier, etc.)

- Update FMEA as new data, field feedback, or design changes emerge

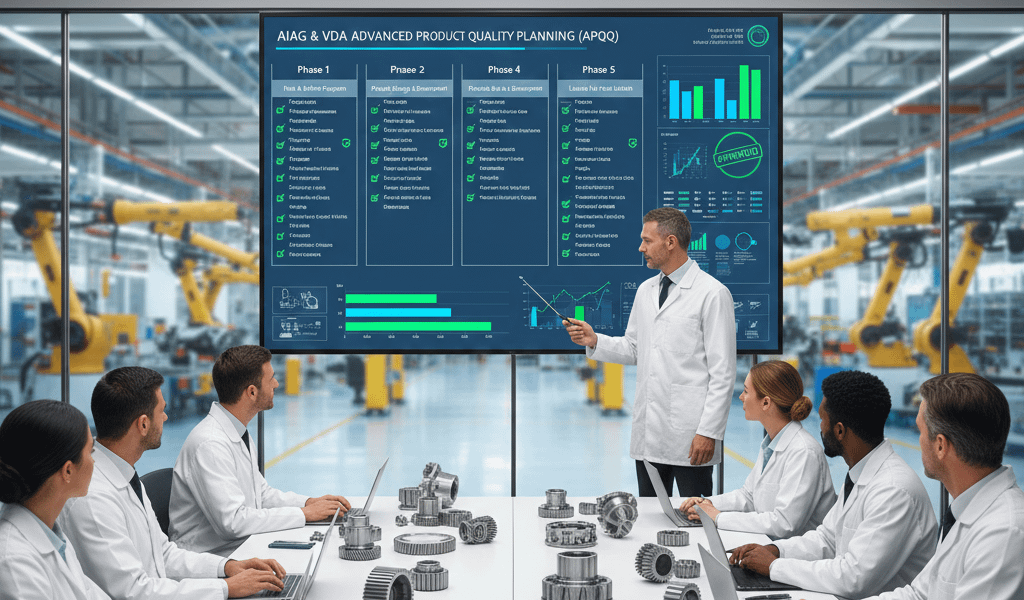

🧭 The 7-Step FMEA Approach (AIAG & VDA)

The unified methodology applies to both Design FMEA (DFMEA) and Process FMEA (PFMEA):

- Step 1: Planning and Preparation – Define scope, team, and objectives

- Step 2: Structure Analysis – Break down the system into subsystems, components, or processes

- Step 3: Function Analysis – Identify functions and intended performance

- Step 4: Failure Analysis – Determine potential failure modes, causes, and effects

- Step 5: Risk Analysis – Assess severity (S), occurrence (O), and detection (D), and determine the Action Priority (AP)

- Step 6: Optimization – Define and implement actions to reduce risk

- Step 7: Documentation and Results Sharing – Finalize, approve, and archive the FMEA
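Steps 2 to 5 (Structure, Function, Failure, and Risk Analysis) build on each other: each structure element gets a function, each function a failure chain of effect, mode, and cause, and each chain receives S, O, and D ratings. A minimal Python sketch of one such record, assuming hypothetical class and field names that are not taken from the handbook or any FMEA software; the ratings reuse the radar misalignment example given later in this guide, while the component and cause text are invented for illustration:

```python
from dataclasses import dataclass


@dataclass
class FailureChain:
    """Hypothetical record: one failure chain (effect <- mode <- cause) for one element."""
    structure_element: str  # Step 2: item from the structure analysis
    function: str           # Step 3: intended function of that element
    failure_effect: str     # Step 4: consequence for the next level or end user
    failure_mode: str       # Step 4: how the function is not fulfilled
    failure_cause: str      # Step 4: why the failure mode occurs
    severity: int = 0       # Step 5: rating for the failure effect (1-10); 0 = not yet rated
    occurrence: int = 0     # Step 5: rating for the failure cause (1-10)
    detection: int = 0      # Step 5: rating for the current detection controls (1-10)

    def is_rated(self) -> bool:
        # Risk analysis is complete for this chain once all three ratings lie in 1..10.
        return all(1 <= r <= 10 for r in (self.severity, self.occurrence, self.detection))


chain = FailureChain(
    structure_element="Radar sensor bracket",
    function="Hold radar sensor at specified alignment angle",
    failure_effect="Reduced ADAS object detection performance",
    failure_mode="Sensor misaligned",
    failure_cause="Bracket deforms under thermal load",
)
print(chain.is_rated())  # False: Step 5 has not assigned ratings yet
chain.severity, chain.occurrence, chain.detection = 6, 6, 3
print(chain.is_rated())  # True
```

Keeping the chain as one record makes it easy to see which effect a severity rating belongs to and which cause an occurrence rating describes.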

📊 Severity, Occurrence, and Detection Ratings

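Each factor is rated on a 1–10 scale using the harmonized ranking tables, which gives consistent ratings across OEMs and regions:

- Severity (S): Seriousness of the failure effect on safety, regulatory compliance, or customer satisfaction

- Occurrence (O): Likelihood that the failure cause occurs, based on history and the effectiveness of prevention controls

- Detection (D): Ability of current controls to detect the failure cause or mode before it reaches the customer (a higher rating means detection is less likely)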

⚠️ Action Priority (AP): A Modern Replacement for RPN

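The traditional Risk Priority Number (RPN = S × O × D) has been replaced by Action Priority (AP), which assigns each S/O/D combination a priority level from a lookup table instead of multiplying the ratings: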

- High (H): Immediate action required or strong justification needed

- Medium (M): Actions recommended to improve prevention or detection

- Low (L): Actions optional; existing controls may be sufficient

AP focuses on the need for action rather than on a numerical ranking. Because it weights Severity first, then Occurrence, then Detection, safety-critical issues receive prompt attention even when they are rare.
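Multiplying the three ratings hides how different two risks can be. The sketch below computes the traditional RPN for three hypothetical failure chains (the ratings are invented for illustration): a rare safety-critical issue, a frequent cosmetic issue, and a minor issue with middling occurrence and detection.

```python
def rpn(severity: int, occurrence: int, detection: int) -> int:
    """Traditional Risk Priority Number: the product S x O x D (range 1-1000)."""
    return severity * occurrence * detection


# Hypothetical failure chains as (S, O, D) tuples.
cases = {
    "safety-critical, rare": (10, 2, 5),
    "cosmetic, frequent": (2, 10, 5),
    "minor, moderate O and D": (3, 6, 6),
}

for name, (s, o, d) in cases.items():
    print(f"{name}: RPN = {rpn(s, o, d)}")
```

The first two cases tie at an RPN of 100, and the minor issue (108) even outranks the safety-critical one, although S = 10 should dominate. AP is designed to avoid this by considering Severity before Occurrence and Detection.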

🧩 Design FMEA (DFMEA)

DFMEA assesses how design-related failures could affect:

- Product performance

- Safety

- Regulatory compliance

Focus Areas:

- Failure modes: How a component might fail

- Failure effects: Impact on system or user

- Failure causes: Root causes linked to design decisions

Outputs include design changes, updated specs, and improved verification methods. Special characteristics must be clearly documented and controlled.

🏭 Process FMEA (PFMEA)

PFMEA examines risks within manufacturing and assembly processes:

Evaluates:

- Process failures (e.g., assembly errors, machine faults)

- Effects on downstream processes or customer satisfaction

- Causes such as human error or inadequate controls

Preventive actions may include:

- Error-proofing (Poka-Yoke)

- Enhanced process controls

- Preventive maintenance

- Operator training

🧠 FMEA-MSR: Monitoring and System Response

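For software-driven and safety-critical systems, the Supplemental FMEA for Monitoring and System Response (FMEA-MSR) adds a layer of operational risk analysis: it evaluates whether faults occurring during customer operation are detected by the system and whether the system responds in time to maintain a safe state or regulatory compliance.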

Key Elements:

- Severity (S): Effect on safety or performance

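- Frequency (F): Likelihood that the failure cause occurs under real-world operating conditions

- Monitoring (M): The system's ability to detect the fault and respond within the fault-tolerant time interval (FTTI); a higher rating means less effective monitoring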
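The Monitoring criterion hinges on timing: the fault must be detected and the system must reach a safe or degraded state within the fault-tolerant time interval (FTTI). A simplified check, assuming the worst-case detection and reaction times in milliseconds are known; the function name and all numbers are hypothetical:

```python
def responds_within_ftti(detection_time_ms: float,
                         reaction_time_ms: float,
                         ftti_ms: float) -> bool:
    """Return True if fault detection plus system reaction fits inside the FTTI.

    Simplified model: monitoring needs `detection_time_ms` to recognise the
    fault and the system needs `reaction_time_ms` to reach a safe or degraded
    state; both must complete before the FTTI elapses.
    """
    if min(detection_time_ms, reaction_time_ms, ftti_ms) < 0:
        raise ValueError("times must be non-negative")
    return detection_time_ms + reaction_time_ms <= ftti_ms


# Hypothetical steering-assist case: detect a torque sensor fault, then ramp
# down assist, against an assumed FTTI of 100 ms.
print(responds_within_ftti(20, 30, 100))  # True: 50 ms <= 100 ms
print(responds_within_ftti(60, 50, 100))  # False: 110 ms > 100 ms
```

A real FMEA-MSR rates Monitoring against the handbook's ranking table; a timing budget like this is only one input to that judgment.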

🔄 Information Flow: DFMEA to PFMEA

There is a critical link between design and process FMEAs:

- DFMEA findings (e.g., critical functions, failure effects) must be shared with PFMEA teams

- Not all DFMEA causes translate directly into PFMEA modes, but alignment is essential for robust controls

📁 Handling Existing FMEAs

- Existing FMEAs under older formats may remain valid for minor revisions

- For new projects or major changes, use the AIAG-VDA aligned format

- Consistency with the 7-step process improves auditability and global compliance

🔐 Intellectual Property and FMEA Documentation

FMEAs often contain proprietary design/process data. Suppliers are generally not required to share full documents unless contractually obligated.

Controlled reviews or summaries can be arranged with customers when necessary.

✅ Best Practices for Effective FMEA

- Start early: don't wait for design freeze or SOP (start of production)

- Use cross-functional teams with diverse expertise

- Continuously update FMEAs with test data and field feedback

- Integrate lessons learned and standardize best practices

- Use digital tools and templates to streamline collaboration

🏁 Conclusion: FMEA as a Strategic Quality Tool

The harmonized AIAG & VDA FMEA methodology is a major step forward in global quality planning. It enables:

- Streamlined documentation

- Improved risk assessment

- Stronger OEM compliance

When done correctly, FMEA is far more than a compliance exercise — it’s a powerful driver of reliability, safety, and customer satisfaction.

Severity (S) — Impact on End User / Safety

Rates the seriousness of the effect should the failure reach the customer or end user. Higher values = more severe impact.

| Rating | Category | Guideline |
|---|---|---|
| 10 | Catastrophic / Safety | Affects safe operation or causes death/serious injury; regulatory non-compliance; immediate stop shipment required. |
| 9 | Major Safety / Regulation | Severe safety or major regulatory breach; field recalls likely; major system function lost. |
| 8 | High / System Loss | Loss of essential vehicle/system function; major customer dissatisfaction; potential field repair. |
| 7 | Significant | Degradation of essential function or repeated failure causing service disruption or high warranty cost. |
| 6 | Moderate | Noticeable performance loss but not safety-critical; customer inconvenience; service action possible. |
| 5 | Minor | Perceived quality issue; cosmetic or comfort impairment affecting many customers but not system function. |
| 4 | Low-Moderate | Local or intermittent quality issue; limited customer annoyance; unlikely to cause returns. |
| 3 | Low | Minor perceived quality problem affecting some customers; easy workaround; no functional loss. |
| 2 | Very Low | Slight cosmetic imperfection with negligible customer impact; aesthetic only. |
| 1 | No Effect | No noticeable effect to the customer; requirement marginal or not relevant. |

Occurrence (O) — Likelihood of Cause

Estimates how frequently the cause of the failure is likely to occur in the intended life/use. Higher = more frequent.

| Rating | Category | Guideline |
|---|---|---|
| 10 | Almost Certain / Unknown | Occurrence cannot be predicted or is extremely high; new design/process with no preventive controls or data. |
| 9 | Very High | Frequent occurrence likely in service; historical data shows many occurrences despite controls. |
| 8 | High | Occurs regularly; similar designs/processes show repeated failures under expected conditions. |
| 7 | Moderately High | Occurs occasionally but with measurable frequency; preventive controls have limited effectiveness. |
| 6 | Moderate | Occasional occurrence; some preventive measures exist but are not fully effective. |
| 5 | Occasional | Occurs infrequently under normal conditions; controls reduce frequency but do not eliminate it. |
| 4 | Low | Rare occurrence; well-established design/process with historical data showing few incidents. |
| 3 | Very Low | Uncommon; robust preventive controls and proven processes make occurrence unlikely. |
| 2 | Remote | Practically improbable in series production due to strong prevention or design features. |
| 1 | Practically Impossible | Failure cause cannot occur given current design and controls; verified by long-term history. |

Detection (D) — Likelihood of Detection Before Customer

Rates the probability that current controls will detect the cause/mode before the customer sees the failure. Higher = less likely to be detected.

| Rating | Category | Guideline |
|---|---|---|
| 10 | Not Detectable | No test or control exists to detect the failure prior to delivery; escape to customer is almost certain. |
| 9 | Very Unlikely to Detect | Existing tests are general and unlikely to detect the specific cause/mode; detection very unreliable. |
| 8 | Low Detection | Control may detect some occurrences but frequently misses the failure cause. |
| 7 | Poor Detection | Detection depends on manual inspection with known variability; misses are common. |
| 6 | Moderate-Poor | Controls detect many failures but certain modes still pass undetected occasionally. |
| 5 | Moderate | Controls are reasonably effective but not fully reliable; some failures may escape. |
| 4 | Good Detection | Automated tests or statistical controls detect most failures; occasional escapes possible. |
| 3 | Very Good | Robust detection methods with high probability of catching the issue before shipment. |
| 2 | Almost Certain Detection | Controls are highly effective and validated (e.g., 100% automatic inspection, in-line monitoring). |
| 1 | Certain Detection | Design/process prevents production of the failure or detection is guaranteed prior to delivery. |

How to use: For each failure mode, determine S, O, and D independently using the guidance above (Severity for the failure effect, Occurrence for its cause, Detection for the current detection controls). Combine team judgment with historical data where available, prefer data-driven Occurrence and Detection ratings, and document the justification in the FMEA notes.
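When FMEA rows are kept in a spreadsheet export or database, the two rules just stated (every rating on the 1–10 scale, a documented justification) can be checked mechanically. A minimal sketch; the `check_ratings` function, its messages, and the sample justification text are hypothetical and not part of any FMEA tool:

```python
def check_ratings(severity: int, occurrence: int, detection: int,
                  justification: str = "") -> list[str]:
    """Return the problems found in one row's S/O/D ratings (empty list = OK)."""
    problems = []
    for name, value in (("Severity", severity),
                        ("Occurrence", occurrence),
                        ("Detection", detection)):
        # Every rating must be an integer on the 1-10 scale.
        if not isinstance(value, int) or not 1 <= value <= 10:
            problems.append(f"{name} must be an integer from 1 to 10, got {value!r}")
    # Occurrence and Detection ratings should be backed by data or team reasoning.
    if not justification.strip():
        problems.append("Missing justification for Occurrence/Detection ratings")
    return problems


print(check_ratings(10, 3, 7, "Field data from predecessor airbag ECU"))  # []
for problem in check_ratings(9, 0, 6):
    print(problem)  # reports the out-of-range Occurrence and the missing justification
```

The check only catches form errors; whether a rating is appropriate remains a team decision.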

📊 Extended Automotive Examples & Action Priority Dashboard

Below are illustrative automotive examples for each FMEA rating dimension, followed by an Action Priority (AP) dashboard based on the AIAG-VDA 5th Edition methodology. These tools help teams:

- Align severity, occurrence, and detection assessments with practical scenarios

- Make risk-reduction priorities visible and actionable

🔎 Detailed Automotive Examples

🚗 Airbag Deployment Failure

Severity: 10 — Failure to deploy during a crash leads to life-threatening injury or death.

Occurrence: 3 — Rare, but possible due to sensor misinterpretation or wiring fault.

Detection: 7 — Detection unlikely with current end-of-line tests; enhanced diagnostics required.

Recommended Action: Introduce redundancy in sensor validation and enhance EOL testing with functional deployment simulation.

⚙️ Steering Assist Malfunction

Severity: 8 — Loss of steering assist reduces vehicle controllability and safety margin.

Occurrence: 5 — Occasional failures from hydraulic leaks or ECU software bugs.

Detection: 4 — Automated calibration test catches most errors, but not all field conditions.

Recommended Action: Expand simulation coverage, implement continuous sensor drift monitoring.

🔋 Battery Overheating Event

Severity: 9 — Risk of thermal runaway and fire hazard.

Occurrence: 4 — Low but possible due to overcharging or cell imbalance.

Detection: 6 — Some escape due to insufficient thermal management diagnostics.

Recommended Action: Add redundant temperature sensors and predictive thermal modeling.

📡 Radar Misalignment

Severity: 6 — Reduced ADAS performance; potential for false negatives in object detection.

Occurrence: 6 — Moderate frequency during assembly or due to thermal drift.

Detection: 3 — Alignment station detects majority of issues before shipment.

Recommended Action: Add automated feedback calibration during EOL and in-field recalibration logic.
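To see why a Severity-first view matters, the four examples above can be ranked two ways: by RPN (S × O × D) and by Severity, then Occurrence, then Detection. The Severity-first sort key is a simplification of the idea behind AP, not the AIAG-VDA AP table itself:

```python
# S, O, D ratings taken from the four examples in this section.
examples = {
    "Airbag deployment failure": (10, 3, 7),
    "Steering assist malfunction": (8, 5, 4),
    "Battery overheating event": (9, 4, 6),
    "Radar misalignment": (6, 6, 3),
}


def rpn(s: int, o: int, d: int) -> int:
    """Traditional Risk Priority Number."""
    return s * o * d


by_rpn = sorted(examples, key=lambda k: rpn(*examples[k]), reverse=True)
# Tuple comparison sorts by S, then O, then D; reverse=True puts the highest first.
by_severity_first = sorted(examples, key=lambda k: examples[k], reverse=True)

for name in by_rpn:
    print(f"{rpn(*examples[name]):4d}  {name}")
print("---")
for name in by_severity_first:
    s, o, d = examples[name]
    print(f"S={s} O={o} D={d}  {name}")
```

By RPN, the battery event (216) edges out the airbag failure (210); the Severity-first ordering puts the airbag failure, the only S = 10 case, at the top.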

🚦 Action Priority Dashboard (AIAG-VDA)

The Action Priority (AP) matrix prioritizes risk reduction based on combinations of Severity (S), Occurrence (O), and Detection (D):

- High Severity (S ≥ 9) almost always requires action: in the AIAG-VDA AP table it is rated High unless Occurrence is low or Detection is near certain.

- Poor detection capability (D ≥ 8) combined with moderate Severity raises the AP level.

- Even moderate risks can escalate to a higher AP if Occurrence is frequent.

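📊 Interpretation (MED-HIGH is a dashboard refinement; the AIAG-VDA handbook itself defines only High, Medium, and Low):

- **HIGH:** Severe risk or undetectable failure; corrective action, or a documented justification that current controls are adequate, is required before launch.

- **MED-HIGH:** Action strongly recommended to reduce occurrence or improve detection.

- **MEDIUM:** Consider mitigation; monitor closely.

- **LOW:** Acceptable as-is, but continue surveillance.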